Corrects the config file name for the linuxbridge agent from /etc/neutron/plugins/ml2/linuxbridge_agent.conf to /etc/neutron/plugins/ml2/linuxbridge_agent.ini Change-Id: I00a0f99b53091d57a923a9b3a0ba17ad1e64accf Closes-Bug: #1505457 Closes-Bug: #1504431


Networking Option 2: Self-service networks

Install and configure the Networking components on the controller node.

Prerequisites

Before you configure networking option 2, you must configure kernel parameters to enable IP forwarding (routing) and disable reverse-path filtering.

Edit the /etc/sysctl.conf file to contain the following parameters:

    net.ipv4.ip_forward=1
    net.ipv4.conf.all.rp_filter=0
    net.ipv4.conf.default.rp_filter=0

Implement the changes:

    # sysctl -p
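Before running `sysctl -p`, it can help to confirm that the file really contains all three parameters. The following is a minimal sketch, not part of the original guide: the helper names `parse_sysctl` and `missing_or_wrong` are hypothetical, and the sample text stands in for the contents of /etc/sysctl.conf.

```python
# Required kernel parameters for networking option 2 (taken from the step above).
REQUIRED = {
    "net.ipv4.ip_forward": "1",
    "net.ipv4.conf.all.rp_filter": "0",
    "net.ipv4.conf.default.rp_filter": "0",
}


def parse_sysctl(text):
    """Parse sysctl.conf-style text into a dict of key -> value strings."""
    params = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines, comment lines ('#' or ';'), and lines without '='.
        if not line or line[0] in "#;" or "=" not in line:
            continue
        key, value = line.split("=", 1)
        params[key.strip()] = value.strip()
    return params


def missing_or_wrong(text, required=REQUIRED):
    """Return the required parameters that are absent or set to another value."""
    params = parse_sysctl(text)
    return {k: v for k, v in required.items() if params.get(k) != v}


# Hypothetical file contents: the third parameter was forgotten.
sample = """\
# kernel parameters for networking option 2
net.ipv4.ip_forward=1
net.ipv4.conf.all.rp_filter = 0
"""

print(missing_or_wrong(sample))
```

An empty result means the file already carries every value the prerequisites call for. To check a real host, read the file with `open("/etc/sysctl.conf").read()` and pass the text to `missing_or_wrong`.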

Install the components

ubuntu

    # apt-get install neutron-server neutron-plugin-ml2 \
      neutron-plugin-linuxbridge-agent neutron-l3-agent neutron-dhcp-agent \
      neutron-metadata-agent python-neutronclient

rdo

    # yum install openstack-neutron openstack-neutron-ml2 \
      openstack-neutron-linuxbridge python-neutronclient

obs

    # zypper install --no-recommends openstack-neutron \
      openstack-neutron-server openstack-neutron-linuxbridge-agent \
      openstack-neutron-l3-agent openstack-neutron-dhcp-agent \
      openstack-neutron-metadata-agent ipset

debian

Install and configure the Networking components

# apt-get install neutron-server neutron-plugin-linuxbridge-agent \ neutron-dhcp-agent neutron-metadata-agentFor networking option 2, also install the

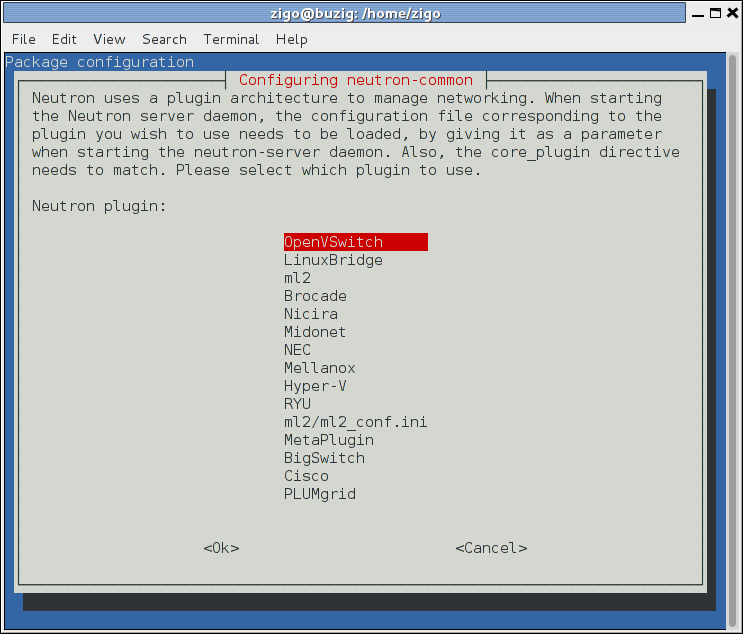

neutron-l3-agentpackage.Respond to prompts for database management, Identity service credentials, service endpoint registration, and message queue credentials.

Select the ML2 plug-in:

Note

Selecting the ML2 plug-in also populates the service_plugins and allow_overlapping_ips options in the /etc/neutron/neutron.conf file with the appropriate values.

ubuntu or rdo or obs

Configure the server component

- Edit the /etc/neutron/neutron.conf file and complete the following actions:

  - In the [database] section, configure database access:

        [database]
        ...
        connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

    Replace NEUTRON_DBPASS with the password you chose for the database.

  - In the [DEFAULT] section, enable the Modular Layer 2 (ML2) plug-in, router service, and overlapping IP addresses:

        [DEFAULT]
        ...
        core_plugin = ml2
        service_plugins = router
        allow_overlapping_ips = True

  - In the [DEFAULT] and [oslo_messaging_rabbit] sections, configure RabbitMQ message queue access:

        [DEFAULT]
        ...
        rpc_backend = rabbit

        [oslo_messaging_rabbit]
        ...
        rabbit_host = controller
        rabbit_userid = openstack
        rabbit_password = RABBIT_PASS

    Replace RABBIT_PASS with the password you chose for the openstack account in RabbitMQ.

  - In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:

        [DEFAULT]
        ...
        auth_strategy = keystone

        [keystone_authtoken]
        ...
        auth_uri = http://controller:5000
        auth_url = http://controller:35357
        auth_plugin = password
        project_domain_id = default
        user_domain_id = default
        project_name = service
        username = neutron
        password = NEUTRON_PASS

    Replace NEUTRON_PASS with the password you chose for the neutron user in the Identity service.

    Note

    Comment out or remove any other options in the [keystone_authtoken] section.

  - In the [DEFAULT] and [nova] sections, configure Networking to notify Compute of network topology changes:

        [DEFAULT]
        ...
        notify_nova_on_port_status_changes = True
        notify_nova_on_port_data_changes = True
        nova_url = http://controller:8774/v2

        [nova]
        ...
        auth_url = http://controller:35357
        auth_plugin = password
        project_domain_id = default
        user_domain_id = default
        region_name = RegionOne
        project_name = service
        username = nova
        password = NOVA_PASS

    Replace NOVA_PASS with the password you chose for the nova user in the Identity service.

  - (Optional) To assist with troubleshooting, enable verbose logging in the [DEFAULT] section:

        [DEFAULT]
        ...
        verbose = True
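The server configuration above contains four placeholder passwords, and a common slip is to leave one of them unreplaced. The sketch below shows one way to scan for that. It uses Python's standard `configparser` as a stand-in for the parser the Networking service itself uses, the function name `unreplaced_placeholders` is hypothetical, and the sample text is an invented excerpt rather than a complete neutron.conf.

```python
import configparser

# Placeholder tokens used in the neutron.conf steps above.
PLACEHOLDERS = ("NEUTRON_DBPASS", "RABBIT_PASS", "NEUTRON_PASS", "NOVA_PASS")


def unreplaced_placeholders(conf_text):
    """Return (section, option, token) for every placeholder still present."""
    parser = configparser.ConfigParser(
        interpolation=None,            # passwords may legitimately contain '%'
        delimiters=("=",),             # do not split values on ':' (URLs)
        default_section="__unused__",  # treat [DEFAULT] as an ordinary section
    )
    parser.read_string(conf_text)
    found = []
    for section in parser.sections():
        for option, value in parser.items(section):
            for token in PLACEHOLDERS:
                if token in value:
                    found.append((section, option, token))
    return found


# Hypothetical excerpt: the database password was set, two others were not.
sample = """\
[database]
connection = mysql+pymysql://neutron:s3cret@controller/neutron

[oslo_messaging_rabbit]
rabbit_host = controller
rabbit_password = RABBIT_PASS

[keystone_authtoken]
username = neutron
password = NEUTRON_PASS
"""

for section, option, token in unreplaced_placeholders(sample):
    print(f"[{section}] {option} still contains {token}")
```

Setting `default_section` to an unused name keeps `configparser` from copying `[DEFAULT]` options into every other section, so each hit is reported once under the section where it actually appears.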

Configure the Modular Layer 2 (ML2) plug-in

The ML2 plug-in uses the Linux bridge mechanism to build layer-2 (bridging and switching) virtual networking infrastructure for instances.

- Edit the /etc/neutron/plugins/ml2/ml2_conf.ini file and complete the following actions:

  - In the [ml2] section, enable flat, VLAN, and VXLAN networks:

        [ml2]
        ...
        type_drivers = flat,vlan,vxlan

  - In the [ml2] section, enable VXLAN project (private) networks:

        [ml2]
        ...
        tenant_network_types = vxlan

  - In the [ml2] section, enable the Linux bridge and layer-2 population mechanisms:

        [ml2]
        ...
        mechanism_drivers = linuxbridge,l2population

    Warning

    After you configure the ML2 plug-in, removing values in the type_drivers option can lead to database inconsistency.

    Note

    The Linux bridge agent only supports VXLAN overlay networks.

  - In the [ml2] section, enable the port security extension driver:

        [ml2]
        ...
        extension_drivers = port_security

  - In the [ml2_type_flat] section, configure the public flat provider network:

        [ml2_type_flat]
        ...
        flat_networks = public

  - In the [ml2_type_vxlan] section, configure the VXLAN network identifier range for private networks:

        [ml2_type_vxlan]
        ...
        vni_ranges = 1:1000
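Two of the ML2 options above depend on each other: every entry in tenant_network_types must also appear in type_drivers, and vni_ranges determines how many VXLAN private networks can exist. The following sketch checks both. The helper names are hypothetical, `configparser` stands in for the service's own parser, and the accepted VNI bounds of 1 to 2^24 - 1 are an assumption based on the 24-bit width of the VXLAN network identifier.

```python
import configparser

MAX_VNI = 2**24 - 1  # VXLAN network identifiers are 24 bits wide


def split_csv(value):
    """Split a comma-separated option value into a list of trimmed items."""
    return [item.strip() for item in value.split(",") if item.strip()]


def parse_vni_ranges(value):
    """Turn 'LOW:HIGH[,LOW:HIGH...]' into a list of (low, high) tuples."""
    ranges = []
    for chunk in split_csv(value):
        low, high = (int(part) for part in chunk.split(":"))
        if not 1 <= low <= high <= MAX_VNI:  # assumed valid bounds
            raise ValueError(f"invalid VNI range: {chunk!r}")
        ranges.append((low, high))
    return ranges


def check_ml2(conf_text):
    """Return (tenant types missing from type_drivers, number of usable VNIs)."""
    parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
    parser.read_string(conf_text)
    type_drivers = split_csv(parser["ml2"]["type_drivers"])
    tenant_types = split_csv(parser["ml2"]["tenant_network_types"])
    unknown = [t for t in tenant_types if t not in type_drivers]
    ranges = parse_vni_ranges(parser["ml2_type_vxlan"]["vni_ranges"])
    vni_count = sum(high - low + 1 for low, high in ranges)
    return unknown, vni_count


# The values from the steps above, without the '...' elisions.
sample = """\
[ml2]
type_drivers = flat,vlan,vxlan
tenant_network_types = vxlan
mechanism_drivers = linuxbridge,l2population
extension_drivers = port_security

[ml2_type_flat]
flat_networks = public

[ml2_type_vxlan]
vni_ranges = 1:1000
"""

unknown, vni_count = check_ml2(sample)
print("tenant types without a driver:", unknown)
print("VXLAN networks available:", vni_count)
```

With the guide's values, the range 1:1000 is inclusive at both ends, so it allows 1000 private networks.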

Configure the Linux bridge agent

The Linux bridge agent builds layer-2 (bridging and switching) virtual networking infrastructure for instances, including VXLAN tunnels for private networks, and handles security groups.

- Edit the /etc/neutron/plugins/ml2/linuxbridge_agent.ini file and complete the following actions:

  - In the [linux_bridge] section, map the public virtual network to the public physical network interface:

        [linux_bridge]
        physical_interface_mappings = public:PUBLIC_INTERFACE_NAME

    Replace PUBLIC_INTERFACE_NAME with the name of the underlying physical public network interface.

  - In the [vxlan] section, enable VXLAN overlay networks, configure the IP address of the physical network interface that handles overlay networks, and enable layer-2 population:

        [vxlan]
        enable_vxlan = True
        local_ip = OVERLAY_INTERFACE_IP_ADDRESS
        l2_population = True

    Replace OVERLAY_INTERFACE_IP_ADDRESS with the IP address of the underlying physical network interface that handles overlay networks. The example architecture uses the management interface.

  - In the [agent] section, enable ARP spoofing protection:

        [agent]
        ...
        prevent_arp_spoofing = True

  - In the [securitygroup] section, enable security groups, enable ipset, and configure the Linux bridge iptables firewall driver:

        [securitygroup]
        ...
        enable_security_group = True
        enable_ipset = True
        firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
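The two values you substitute in this file have a fixed shape: physical_interface_mappings is a list of NETWORK:INTERFACE pairs, and local_ip must be a literal IP address. The sketch below validates both with the standard library. The function names are hypothetical, and the interface name `eth1` and the address `10.0.0.11` are invented sample values, not ones the guide prescribes.

```python
import ipaddress


def parse_interface_mappings(value):
    """Parse 'net1:if1[,net2:if2...]' into a dict of network -> interface."""
    mappings = {}
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue
        network, sep, interface = entry.partition(":")
        if not sep or not network.strip() or not interface.strip():
            raise ValueError(f"expected NETWORK:INTERFACE, got {entry!r}")
        mappings[network.strip()] = interface.strip()
    return mappings


def is_valid_ip(value):
    """Return True if value is a literal IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(value)
    except ValueError:
        return False
    return True


# Hypothetical substituted values.
mappings = parse_interface_mappings("public:eth1")
print(mappings)

# The network name on the left must match a name listed in flat_networks
# in ml2_conf.ini ('public' in this guide).
flat_networks = {"public"}
print(set(mappings) <= flat_networks)

# A forgotten placeholder is not an IP address, so this check catches it.
print(is_valid_ip("10.0.0.11"), is_valid_ip("OVERLAY_INTERFACE_IP_ADDRESS"))
```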

Configure the layer-3 agent

The Layer-3 (L3) agent provides routing and NAT services for virtual networks.

- Edit the /etc/neutron/l3_agent.ini file and complete the following actions:

  - In the [DEFAULT] section, configure the Linux bridge interface driver and external network bridge:

        [DEFAULT]
        ...
        interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver
        external_network_bridge =

    Note

    The external_network_bridge option intentionally lacks a value to enable multiple external networks on a single agent.

  - (Optional) To assist with troubleshooting, enable verbose logging in the [DEFAULT] section:

        [DEFAULT]
        ...
        verbose = True
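An option that is present with an empty value is not the same as an option that is absent, which is why the external_network_bridge line must stay in the file. The short sketch below illustrates the difference using Python's `configparser` as a stand-in. The L3 agent reads its configuration through its own parser, so treat this as an illustration of the INI semantics rather than of the agent's behavior.

```python
import configparser

# The [DEFAULT] values from the step above, without the '...' elision.
sample = """\
[DEFAULT]
interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver
external_network_bridge =
"""

parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
parser.read_string(sample)
defaults = parser.defaults()

# The key exists, and its value is the empty string.
has_option = "external_network_bridge" in defaults
value = defaults["external_network_bridge"]
print(has_option, repr(value))

# An option that was never written is simply missing from the mapping.
print("some_other_option" in defaults)
```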

Configure the DHCP agent

The DHCP agent provides DHCP services for virtual networks.

- Edit the /etc/neutron/dhcp_agent.ini file and complete the following actions:

  - In the [DEFAULT] section, configure the Linux bridge interface driver, Dnsmasq DHCP driver, and enable isolated metadata so instances on public networks can access metadata over the network:

        [DEFAULT]
        ...
        interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver
        dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq
        enable_isolated_metadata = True

  - (Optional) To assist with troubleshooting, enable verbose logging in the [DEFAULT] section:

        [DEFAULT]
        ...
        verbose = True

Overlay networks such as VXLAN include additional packet headers that increase overhead and decrease space available for the payload or user data. Without knowledge of the virtual network infrastructure, instances attempt to send packets using the default Ethernet maximum transmission unit (MTU) of 1500 bytes. Internet protocol (IP) networks contain the path MTU discovery (PMTUD) mechanism to detect end-to-end MTU and adjust packet size accordingly. However, some operating systems and networks block or otherwise lack support for PMTUD, causing performance degradation or connectivity failure.

Ideally, you can prevent these problems by enabling jumbo frames on the physical network that contains your tenant virtual networks. Jumbo frames support MTUs up to approximately 9000 bytes, which negates the impact of VXLAN overhead on virtual networks. However, many network devices lack support for jumbo frames and OpenStack administrators often lack control over network infrastructure. Given the latter complications, you can also prevent MTU problems by reducing the instance MTU to account for VXLAN overhead. Determining the proper MTU value often takes experimentation, but 1450 bytes works in most environments. You can configure the DHCP server that assigns IP addresses to your instances to also adjust the MTU.

Note

Some cloud images ignore the DHCP MTU option, in which case you should configure it using metadata, a script, or other suitable method.

- In the [DEFAULT] section, enable the dnsmasq configuration file:

      [DEFAULT]
      ...
      dnsmasq_config_file = /etc/neutron/dnsmasq-neutron.conf

- Create and edit the /etc/neutron/dnsmasq-neutron.conf file to enable the DHCP MTU option (26) and configure it to 1450 bytes:

      dhcp-option-force=26,1450
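The 1450-byte value can be derived from the headers that VXLAN adds when the overlay runs over IPv4: an outer IP header, a UDP header, the VXLAN header, and the encapsulated Ethernet header of the instance's frame. The sketch below does that arithmetic and builds the matching dnsmasq line. The function names are hypothetical, and the header sizes assume no IP options and no VLAN tag on the inner frame.

```python
# Header sizes in bytes (assuming no IP options and an untagged inner frame).
OUTER_IPV4 = 20      # outer IPv4 header
OUTER_IPV6 = 40      # outer IPv6 header, if the overlay runs over IPv6
OUTER_UDP = 8        # outer UDP header
VXLAN_HEADER = 8     # VXLAN header
INNER_ETHERNET = 14  # Ethernet header of the encapsulated instance frame


def vxlan_instance_mtu(physical_mtu=1500, outer_ip_header=OUTER_IPV4):
    """Largest instance MTU that fits inside one physical-network packet."""
    overhead = outer_ip_header + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET
    return physical_mtu - overhead


def dnsmasq_mtu_line(mtu):
    """Build the dnsmasq option line that forces DHCP option 26 (MTU)."""
    return f"dhcp-option-force=26,{mtu}"


print(vxlan_instance_mtu())                  # default 1500-byte physical MTU
print(vxlan_instance_mtu(9000))              # jumbo frames on the physical network
print(vxlan_instance_mtu(1500, OUTER_IPV6))  # overlay carried over IPv6
print(dnsmasq_mtu_line(vxlan_instance_mtu()))
```

With a 1500-byte physical MTU and an IPv4 underlay, the overhead is 50 bytes, which gives the 1450 bytes used in dnsmasq-neutron.conf. If your underlay adds further encapsulation, the usable value is lower, which is one reason the text recommends experimenting.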

Return to Networking controller node configuration.