

Install and configure compute node

The compute node handles connectivity and security groups for instances.

To configure prerequisites

Before you install and configure OpenStack Networking, you must configure certain kernel networking parameters.

Edit the /etc/sysctl.conf file to contain the following parameters:

net.ipv4.conf.all.rp_filter=0
net.ipv4.conf.default.rp_filter=0
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1

Implement the changes:

# sysctl -p
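If you apply this step with a script, for example across several compute nodes, the parameters should be appended only when they are missing so that reruns do not duplicate lines. The following is a minimal sketch, assuming a plain key=value file. The function name add_sysctl_params is hypothetical, and it leaves an existing key untouched even if its value differs, so review such lines by hand.

```shell
# Hypothetical helper (not part of OpenStack): append the kernel parameters
# required by Networking to a sysctl-style file, skipping any key that is
# already present. Existing keys are left unchanged even if their value differs.
add_sysctl_params() {
    conf_file="${1:-/etc/sysctl.conf}"
    for param in \
        net.ipv4.conf.all.rp_filter=0 \
        net.ipv4.conf.default.rp_filter=0 \
        net.bridge.bridge-nf-call-iptables=1 \
        net.bridge.bridge-nf-call-ip6tables=1
    do
        key="${param%%=*}"
        # Escape the dots so grep matches them literally.
        key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
        # Treat both "key=value" and "key = value" at line start as already set.
        if ! grep -Eq "^[[:space:]]*${key_re}[[:space:]]*=" "$conf_file" 2>/dev/null; then
            printf '%s\n' "$param" >> "$conf_file"
        fi
    done
}
```

Run it as root, for example add_sysctl_params /etc/sysctl.conf, and then apply the file with sysctl -p as in the step above.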

For Ubuntu, RDO, and openSUSE/SLES:

To install the Networking components

For Ubuntu:

# apt-get install neutron-plugin-ml2 neutron-plugin-openvswitch-agent

For RDO:

# yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-openvswitch

For openSUSE/SLES:

# zypper install --no-recommends openstack-neutron-openvswitch-agent ipset

Note

SUSE does not use a separate ML2 plug-in package.

For Debian:

To install and configure the Networking components

# apt-get install neutron-plugin-openvswitch-agent openvswitch-datapath-dkms

Note

Debian does not use a separate ML2 plug-in package.

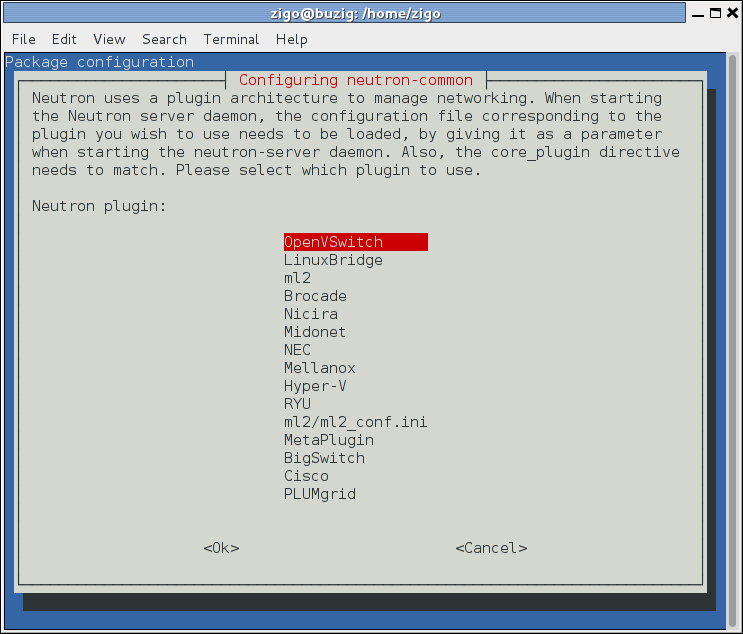

Respond to prompts for

database management,Identity service credentials,service endpoint, andmessage queue credentials.Select the ML2 plug-in:

Note

Selecting the ML2 plug-in also populates the service_plugins and allow_overlapping_ips options in the /etc/neutron/neutron.conf file with the appropriate values.

For Ubuntu, RDO, and openSUSE/SLES:

To configure the Networking common components

The Networking common component configuration includes the authentication mechanism, message queue, and plug-in.

Note

Default configuration files vary by distribution. You might need to add these sections and options rather than modifying existing sections and options. Also, an ellipsis (...) in the configuration snippets indicates potential default configuration options that you should retain.
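Because you might need to add a section or option rather than modify an existing one, a scripted edit has to handle both cases. The following minimal sketch assumes simple key = value INI syntax without multi-line values. The function name ini_set is hypothetical and is not part of OpenStack. It replaces the first uncommented occurrence of the option in the section, otherwise appends the option to the end of the section, and otherwise appends the whole section to the end of the file. If the crudini utility is available on your system, its --set mode covers the same need.

```shell
# Hypothetical helper (not part of OpenStack): set "key = value" in [section]
# of a simple INI file. It replaces the first uncommented occurrence of the key
# in that section, otherwise appends the key at the end of the section,
# otherwise appends a new section at the end of the file.
ini_set() {
    file="$1"
    tmp_file=$(mktemp) || return 1
    # Pass arguments through the environment so awk does not interpret
    # backslash escapes in them (as it would with -v).
    INI_SECTION="$2" INI_KEY="$3" INI_VALUE="$4" awk '
        BEGIN {
            section = ENVIRON["INI_SECTION"]
            key = ENVIRON["INI_KEY"]
            value = ENVIRON["INI_VALUE"]
        }
        function flush_blanks() { while (blanks > 0) { print ""; blanks-- } }
        function add_if_pending() {
            if (in_section && !done) { print key " = " value; done = 1 }
        }
        /^[ \t]*\[.*\][ \t]*$/ {
            add_if_pending()      # leaving the target section: add the key here
            flush_blanks()
            name = $0
            gsub(/^[ \t]*\[|\][ \t]*$/, "", name)
            in_section = (name == section)
            if (in_section) seen = 1
            print
            next
        }
        # Hold blank lines so an added key lands before them, not after.
        in_section && !done && /^[ \t]*$/ { blanks++; next }
        in_section && !done {
            flush_blanks()
            line = $0
            sub(/^[ \t]+/, "", line)
            k = line
            sub(/[ \t]*=.*$/, "", k)
            # Skip comment lines; replace a real "key = ..." line in place.
            if (line !~ /^[#;]/ && index(line, "=") > 0 && k == key) {
                print key " = " value
                done = 1
                next
            }
        }
        { print }
        END {
            add_if_pending()
            flush_blanks()
            if (!seen) { print ""; print "[" section "]"; print key " = " value }
        }
    ' "$file" > "$tmp_file" && cat "$tmp_file" > "$file"
    status=$?
    rm -f "$tmp_file"
    return "$status"
}
```

For example, ini_set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone sets one of the options used in the following steps. The function writes the result back with cat rather than mv, so the original file keeps its ownership and permissions.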

Open the /etc/neutron/neutron.conf file and edit the [database] section. Comment out any connection options because compute nodes do not directly access the database.

In the [DEFAULT] and [oslo_messaging_rabbit] sections, configure RabbitMQ message queue access:

[DEFAULT]
...
rpc_backend = rabbit

[oslo_messaging_rabbit]
...
rabbit_host = controller
rabbit_userid = openstack
rabbit_password = RABBIT_PASS

Replace RABBIT_PASS with the password you chose for the openstack account in RabbitMQ.

In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:

[DEFAULT]
...
auth_strategy = keystone

[keystone_authtoken]
...
auth_uri = http://controller:5000
auth_url = http://controller:35357
auth_plugin = password
project_domain_id = default
user_domain_id = default
project_name = service
username = neutron
password = NEUTRON_PASS

Replace NEUTRON_PASS with the password you chose for the neutron user in the Identity service.

Note

Comment out or remove any other options in the [keystone_authtoken] section.

In the [DEFAULT] section, enable the Modular Layer 2 (ML2) plug-in, router service, and overlapping IP addresses:

[DEFAULT]
...
core_plugin = ml2
service_plugins = router
allow_overlapping_ips = True

(Optional) To assist with troubleshooting, enable verbose logging in the [DEFAULT] section:

[DEFAULT]
...
verbose = True
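To check what the file actually contains after editing, for example that no uncommented connection option remains in [database] or that RABBIT_PASS has been replaced, a small reader helps. This is a minimal sketch assuming simple key = value INI syntax; the function name ini_get is hypothetical. It prints the value of every uncommented occurrence of the option in the section, so an empty result means the option is not set.

```shell
# Hypothetical helper (not part of OpenStack): print the value of every
# uncommented "key = value" line for the given key inside [section].
# Prints nothing if the option is absent or only present in comments.
ini_get() {
    INI_SECTION="$2" INI_KEY="$3" awk '
        BEGIN { section = ENVIRON["INI_SECTION"]; key = ENVIRON["INI_KEY"] }
        /^[ \t]*\[.*\][ \t]*$/ {
            name = $0
            gsub(/^[ \t]*\[|\][ \t]*$/, "", name)
            in_section = (name == section)
            next
        }
        # Only consider lines that are not comments and contain "=".
        in_section && /^[ \t]*[^#; \t]/ && index($0, "=") > 0 {
            k = $0
            sub(/^[ \t]+/, "", k)
            sub(/[ \t]*=.*$/, "", k)
            if (k == key) {
                v = $0
                sub(/^[^=]*=[ \t]*/, "", v)
                sub(/[ \t]+$/, "", v)
                print v
            }
        }
    ' "$1"
}
```

For example, ini_get /etc/neutron/neutron.conf database connection should print nothing on a compute node, and ini_get /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_host should print controller.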

To configure the Modular Layer 2 (ML2) plug-in

The ML2 plug-in uses the Open vSwitch (OVS) mechanism (agent) to build the virtual networking framework for instances.

Open the /etc/neutron/plugins/ml2/ml2_conf.ini file and edit the [ml2] section. Enable the flat, VLAN, generic routing encapsulation (GRE), and virtual extensible LAN (VXLAN) network type drivers, GRE tenant networks, and the OVS mechanism driver:

[ml2]
...
type_drivers = flat,vlan,gre,vxlan
tenant_network_types = gre
mechanism_drivers = openvswitch

In the [ml2_type_gre] section, configure the tunnel identifier (id) range:

[ml2_type_gre]
...
tunnel_id_ranges = 1:1000

In the [securitygroup] section, enable security groups, enable ipset, and configure the OVS iptables firewall driver:

[securitygroup]
...
enable_security_group = True
enable_ipset = True
firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

In the [ovs] section, enable tunnels and configure the local tunnel endpoint:

[ovs]
...
local_ip = INSTANCE_TUNNELS_INTERFACE_IP_ADDRESS

Replace INSTANCE_TUNNELS_INTERFACE_IP_ADDRESS with the IP address of the instance tunnels network interface on your compute node.

In the [agent] section, enable GRE tunnels:

[agent]
...
tunnel_types = gre
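The configuration steps in this guide use placeholders such as RABBIT_PASS, NEUTRON_PASS, and INSTANCE_TUNNELS_INTERFACE_IP_ADDRESS, and a placeholder left in place is an easy mistake to miss. The following sketch, with the hypothetical function name check_placeholders, reports any of these three strings that still appear on uncommented lines of the given files; extend the pattern if your deployment uses other placeholders.

```shell
# Hypothetical helper (not part of OpenStack): list uncommented lines that
# still contain a placeholder from this guide. Returns 1 if any are found.
check_placeholders() {
    status=0
    for conf in "$@"; do
        # -n adds line numbers, -w matches whole words only; the second grep
        # drops lines whose first non-blank character is "#" or ";".
        matches=$(grep -nwE 'RABBIT_PASS|NEUTRON_PASS|INSTANCE_TUNNELS_INTERFACE_IP_ADDRESS' "$conf" \
            | grep -vE '^[0-9]+:[[:space:]]*[#;]') || true
        if [ -n "$matches" ]; then
            printf '%s:\n%s\n' "$conf" "$matches"
            status=1
        fi
    done
    return "$status"
}
```

For example, check_placeholders /etc/neutron/neutron.conf /etc/neutron/plugins/ml2/ml2_conf.ini prints the offending lines under each file name; a non-zero exit status means at least one placeholder remains.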

To configure the Open vSwitch (OVS) service

The OVS service provides the underlying virtual networking framework for instances.

For RDO and openSUSE/SLES:

Start the OVS service and configure it to start when the system boots:

# systemctl enable openvswitch.service

# systemctl start openvswitch.service

For Ubuntu and Debian:

Restart the OVS service:

# service openvswitch-switch restart

To configure Compute to use Networking

By default, distribution packages configure Compute to use legacy networking. You must reconfigure Compute to manage networks through Networking.

Open the /etc/nova/nova.conf file and edit the [DEFAULT] section. Configure the APIs and drivers:

[DEFAULT]
...
network_api_class = nova.network.neutronv2.api.API
security_group_api = neutron
linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver
firewall_driver = nova.virt.firewall.NoopFirewallDriver

Note

By default, Compute uses an internal firewall service. Since Networking includes a firewall service, you must disable the Compute firewall service by using the nova.virt.firewall.NoopFirewallDriver firewall driver.

In the [neutron] section, configure access parameters:

[neutron]
...
url = http://controller:9696
auth_strategy = keystone
admin_auth_url = http://controller:35357/v2.0
admin_tenant_name = service
admin_username = neutron
admin_password = NEUTRON_PASS

Replace NEUTRON_PASS with the password you chose for the neutron user in the Identity service.
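To confirm several of these options at once, you can compare the file against a list of expected values. The following is a minimal sketch, assuming simple key = value INI syntax and values without spaces; the function name check_ini_settings and its "section key value" input format are hypothetical. It reports options that are missing or differ and returns a non-zero status if any are found.

```shell
# Hypothetical helper (not part of OpenStack): compare a simple INI file with
# expected settings read from stdin, one "section key value" line each
# (values must not contain spaces). Prints missing or differing options and
# returns 1 if there are any.
check_ini_settings() {
    awk '
        # First input ("-", stdin): the expected settings.
        phase != "config" { if (NF >= 3) want[$1 SUBSEP $2] = $3; next }
        /^[ \t]*\[.*\][ \t]*$/ {
            section = $0
            gsub(/^[ \t]*\[|\][ \t]*$/, "", section)
            next
        }
        # Record uncommented "key = value" lines; a later duplicate wins.
        /^[ \t]*[^#; \t]/ && index($0, "=") > 0 {
            k = $0; sub(/^[ \t]+/, "", k); sub(/[ \t]*=.*$/, "", k)
            v = $0; sub(/^[^=]*=[ \t]*/, "", v); sub(/[ \t]+$/, "", v)
            have[section SUBSEP k] = v
        }
        END {
            bad = 0
            for (id in want) {
                split(id, p, SUBSEP)
                if (!(id in have)) {
                    print "missing: [" p[1] "] " p[2]
                    bad = 1
                } else if (have[id] != want[id]) {
                    print "differs: [" p[1] "] " p[2] " = " have[id] " (expected " want[id] ")"
                    bad = 1
                }
            }
            exit bad
        }
    ' - phase=config "$1"
}

# Example (on the compute node):
# check_ini_settings /etc/nova/nova.conf <<'EOF'
# DEFAULT security_group_api neutron
# DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver
# neutron url http://controller:9696
# EOF
```

The expected values are read before the configuration file by switching on the phase variable, which awk assigns between the two input operands.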

To finalize the installation

For RDO:

The Networking service initialization scripts expect a symbolic link /etc/neutron/plugin.ini pointing to the ML2 plug-in configuration file, /etc/neutron/plugins/ml2/ml2_conf.ini. If this symbolic link does not exist, create it using the following command:

# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Due to a packaging bug, the Open vSwitch agent initialization script explicitly looks for the Open vSwitch plug-in configuration file rather than a symbolic link /etc/neutron/plugin.ini pointing to the ML2 plug-in configuration file. Run the following commands to resolve this issue:

# cp /usr/lib/systemd/system/neutron-openvswitch-agent.service \
  /usr/lib/systemd/system/neutron-openvswitch-agent.service.orig
# sed -i 's,plugins/openvswitch/ovs_neutron_plugin.ini,plugin.ini,g' \
  /usr/lib/systemd/system/neutron-openvswitch-agent.service

Restart the Compute service:

# systemctl restart openstack-nova-compute.service

Start the Open vSwitch (OVS) agent and configure it to start when the system boots:

# systemctl enable neutron-openvswitch-agent.service
# systemctl start neutron-openvswitch-agent.service
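If you automate the RDO steps above, making them idempotent avoids overwriting the .orig backup on a second run. The following is a minimal sketch; the function name rdo_fix_plugin_paths and its optional root-directory argument (useful for trying it against a scratch copy) are hypothetical. It creates the symbolic link only when nothing exists at /etc/neutron/plugin.ini, and it backs up and edits the unit file only while the file still references the Open vSwitch plug-in configuration file.

```shell
# Hypothetical helper (not part of OpenStack): apply the two RDO fixes above
# so that running it again changes nothing. The optional argument is a root
# directory prefix (default: the real root), e.g. for testing on a copy.
rdo_fix_plugin_paths() {
    root="${1:-}"
    link="$root/etc/neutron/plugin.ini"
    unit="$root/usr/lib/systemd/system/neutron-openvswitch-agent.service"

    # Create the link only if nothing (not even a dangling link) exists there.
    # The link target is always the absolute path used in the command above.
    if [ ! -e "$link" ] && [ ! -L "$link" ]; then
        ln -s /etc/neutron/plugins/ml2/ml2_conf.ini "$link"
    fi

    # Edit the unit file only while it still names the OVS plug-in file,
    # and keep the first backup instead of overwriting it.
    if [ -f "$unit" ] && grep -q 'plugins/openvswitch/ovs_neutron_plugin.ini' "$unit"; then
        [ -e "$unit.orig" ] || cp "$unit" "$unit.orig"
        sed -i 's,plugins/openvswitch/ovs_neutron_plugin.ini,plugin.ini,g' "$unit"
    fi
}
```

Run it as root without arguments before the restart and start commands. Because the unit file changes on disk, systemd typically also needs systemctl daemon-reload before it uses the edited file; the steps above do not include this, so verify it on your system.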

For openSUSE/SLES:

The Networking service initialization scripts expect the variable NEUTRON_PLUGIN_CONF in the /etc/sysconfig/neutron file to reference the ML2 plug-in configuration file. Edit the /etc/sysconfig/neutron file and add the following:

NEUTRON_PLUGIN_CONF="/etc/neutron/plugins/ml2/ml2_conf.ini"

Restart the Compute service:

# systemctl restart openstack-nova-compute.service

Start the Open vSwitch (OVS) agent and configure it to start when the system boots:

# systemctl enable openstack-neutron-openvswitch-agent.service
# systemctl start openstack-neutron-openvswitch-agent.service
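A scripted version of the openSUSE/SLES edit should replace an existing NEUTRON_PLUGIN_CONF assignment instead of adding a second one. The following is a minimal sketch; the function name set_neutron_plugin_conf is hypothetical. It rewrites any uncommented assignment to the ML2 path and appends the line only when no assignment exists.

```shell
# Hypothetical helper (not part of OpenStack): make NEUTRON_PLUGIN_CONF in a
# sysconfig-style file point at the ML2 configuration, replacing an existing
# uncommented assignment or appending one if none exists.
set_neutron_plugin_conf() {
    sysconfig="${1:-/etc/sysconfig/neutron}"
    wanted='NEUTRON_PLUGIN_CONF="/etc/neutron/plugins/ml2/ml2_conf.ini"'
    if grep -q '^[[:space:]]*NEUTRON_PLUGIN_CONF=' "$sysconfig" 2>/dev/null; then
        # "|" is the sed delimiter because the value contains slashes.
        sed -i "s|^[[:space:]]*NEUTRON_PLUGIN_CONF=.*|$wanted|" "$sysconfig"
    else
        printf '%s\n' "$wanted" >> "$sysconfig"
    fi
}
```

Run it as root, for example set_neutron_plugin_conf /etc/sysconfig/neutron, before restarting the services.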

For Ubuntu and Debian:

Restart the Compute service:

# service nova-compute restart

Restart the Open vSwitch (OVS) agent:

# service neutron-plugin-openvswitch-agent restart
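Because the finalize commands differ by distribution, a script that runs on mixed compute nodes has to choose among them. The following sketch prints, rather than runs, the restart and start commands listed above for a given ID value from /etc/os-release. The function name finalize_commands and the mapping from ID values to the RDO, openSUSE/SLES, and Ubuntu/Debian packages are assumptions; check the ID your systems report. It does not cover the RDO symbolic-link and unit-file steps or the openSUSE/SLES sysconfig edit.

```shell
# Hypothetical helper (not part of OpenStack): print (not run) the finalize
# commands from this section for an /etc/os-release ID value. The mapping of
# ID values to package families is an assumption; verify it for your systems.
finalize_commands() {
    case "$1" in
        rhel|centos|fedora)
            echo "systemctl restart openstack-nova-compute.service"
            echo "systemctl enable neutron-openvswitch-agent.service"
            echo "systemctl start neutron-openvswitch-agent.service"
            ;;
        opensuse*|sles)
            echo "systemctl restart openstack-nova-compute.service"
            echo "systemctl enable openstack-neutron-openvswitch-agent.service"
            echo "systemctl start openstack-neutron-openvswitch-agent.service"
            ;;
        ubuntu|debian)
            echo "service nova-compute restart"
            echo "service neutron-plugin-openvswitch-agent restart"
            ;;
        *)
            echo "unsupported distribution ID: $1" >&2
            return 1
            ;;
    esac
}

# Example: read ID in a subshell and print the matching commands.
# (. /etc/os-release && finalize_commands "$ID")
```

Printing instead of executing keeps the choice reviewable before anything is restarted.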

Verify operation

Perform the following commands on the controller node:

Source the admin credentials to gain access to admin-only CLI commands:

$ source admin-openrc.sh

List agents to verify successful launch of the neutron agents:

$ neutron agent-list
+------+--------------------+----------+-------+----------------+---------------------------+
| id   | agent_type         | host     | alive | admin_state_up | binary                    |
+------+--------------------+----------+-------+----------------+---------------------------+
|302...| Metadata agent     | network  | :-)   | True           | neutron-metadata-agent    |
|4bd...| Open vSwitch agent | network  | :-)   | True           | neutron-openvswitch-agent |
|756...| L3 agent           | network  | :-)   | True           | neutron-l3-agent          |
|9c4...| DHCP agent         | network  | :-)   | True           | neutron-dhcp-agent        |
|a5a...| Open vSwitch agent | compute1 | :-)   | True           | neutron-openvswitch-agent |
+------+--------------------+----------+-------+----------------+---------------------------+

This output should indicate four agents alive on the network node and one agent alive on the compute node.
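To check the output in a script rather than by eye, you can count alive agents per host. The following is a minimal sketch that assumes the column layout shown above (id, agent_type, host, alive, and so on) and treats :-) as alive; the function name summarize_agents is hypothetical. It prints one "host alive/total" line per host in the order the hosts first appear.

```shell
# Hypothetical helper (not part of OpenStack): read "neutron agent-list" table
# output on stdin and print "<host> <alive>/<total>" for each host.
# Assumes the column order id | agent_type | host | alive | ...
summarize_agents() {
    awk -F'|' '
        # Rows start with "|"; "+----+" border lines are ignored.
        /^\|/ {
            host = $4; alive = $5
            gsub(/^[ \t]+|[ \t]+$/, "", host)
            gsub(/^[ \t]+|[ \t]+$/, "", alive)
            if (host == "host") next          # skip the header row
            if (!(host in total)) order[++n] = host
            total[host]++
            if (alive == ":-)") up[host]++
        }
        END {
            for (i = 1; i <= n; i++)
                printf "%s %d/%d\n", order[i], up[order[i]], total[order[i]]
        }
    '
}
```

For example, neutron agent-list | summarize_agents applied to the output above prints network 4/4 followed by compute1 1/1.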